I’ve been hard at work on a new version of Onyx. I’ll write another post later explaining why I’m “starting over” and what the new goals are, as that’s not relevant to this post. Since I am starting over, though, it’s given me a good opportunity to switch to using .NET 4 and the new technologies available with it. One of the more exciting new features that I’m very excited to utilize is the new Contract system which brings Design by Contract (DbC) to the .NET world.

DbC was first implemented in the Eiffel programming language, and was discussed in length in the book Object Oriented Software Construction by Bertrand Meyer. DbC is a design and development methodology intended to try and ensure software correctness. I’ve been intrigued by DbC ever since reading about it in OOSC about 10 years ago, but since the idea really requires language support and so few languages include this support (Eiffel being the only one I’ve even written a “hello world” program in), I’ve never really had the opportunity to “use it in anger”. Now that DbC is going to be available in .NET, I can finally get some real world experience with it.

So, what is DbC?

DbC is a way to design, build, document and verify software based on the idea of contracts. In the legal world a contract is used to specify what two parties agree to exchange (goods or services). In DbC contracts are used in the same way. The consumer of a routine agrees to provide certain input, while the routine agrees to provide certain output. The stronger both sides of this contract is specified, the more robust and reusable the code will be. When the contract is codified in the software, it’s possible to use tools to statically verify the correctness of the code, to produce unit tests, and to provide runtime exceptions when the contract is violated. When the contract is well documented, it’s easier to write code that consumes it. All of this leads to higher quality code.

In DbC, contracts are codified using special assertions in a declarative fashion. Methods are decorated with two types of assertions: preconditions and postconditions. The preconditions specify what a caller is required to do, while the postconditions specify what the method will ensure at the end of the call. These assertions are specified using predicates. In .NET 4 these assertions are specified using calls to methods on the static Contract class. The Requires overloads are used to specify the preconditions, and the Ensures overloads are used to specify the postconditions.

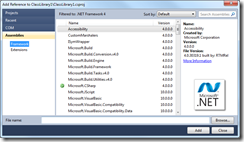

Now’s a good time for an aside. I made two claims earlier about DbC that seem to be contradicted by what I just said about how you specify assertions in .NET 4. First, I said that assertions are declarative, while the use of the Contract class appears to be imperative. Second, I said that DbC requires language support, while the Contract class appears to require only library support. I didn’t really get either of these claims wrong, it’s just that Microsoft found a very unusual way to support DbC in .NET. They use an IL rewriter to modify the binary output after it has been compiled. This allowed them to introduce DbC to all .NET languages, even those that don’t have built in support for the concept, while retaining backwards compatibility.

Because assertions are really declarative, despite the novel implementation provided in .NET 4, there’s some restrictions on how you are allowed to write them. They must be placed at the beginning of the method, and need to follow a certain order: Requires then Ensures.

OK, that covers the declarative nature, but what about the claim that language support is required? Why would language support be necessary? We’ve been using library based assertions for a very long time in nearly every language imaginable, after all.

Well, to understand this claim, you need to think about inheritance. Type inheritance obviously requires language support, and contract inheritance isn’t any different. The assertions for a base class method apply equally to overrides in a derived class, and that’s nearly impossible to achieve without language support. Well, the IL rewriter provides this same support without changing the language. Neat, huh?

We should discuss contract inheritance a bit. See overrides may not have the exact same contract as the base. However, the contract needs to be “compatible”. Thinking hard about this, we can come up with some rules. Let’s first think about the preconditions. If the preconditions are identical, we obviously have no problems. What if we add to them (making them stronger), though? This won’t work. Using polymorphism, if a derived instance is passed to a method expecting a base instance, it could violate the stronger requirements because it knows nothing about them. What if we remove some requirements (making them weaker) instead? This is fine, because when the method taking a base instance obeys the base requirements, it will still meet the derived class’s requirements.

Now, let’s think about the postconditions. What happens if we add new conditions (making them stronger)? This is fine, because we’ve still met the base requirements. What about removing conditions (making them weaker)? This doesn’t work, because we may not satisfy the base requirements.

So, in theory at least, we can weaken the preconditions, and strengthen the postconditions. However, in .NET 4 they’ve only allowed us to strengthen the postconditions. The justification they give is that “in practice” there’s rarely a need to weaken the preconditions, and supporting this concept is too complicated. I’m not sure if this justification is valid or not, as I’ve little experience with DbC, but that’s what we have.

So, now we have preconditions and postconditions. There’s a third type of assertion that’s very important: object invariants. Object invariants are assertions about the state of the object between calls to methods on it. These assertions must hold before and after every call to instance methods on the object. In .NET 4, object invariants are supported by decorating methods that specify the invariants (through calls to the Invariant method on the Contract class) with an attribute (ContractInvariantMethodAttribute).

So, there’s a lot of theory. Next, we’ll try to look at the practice of using DbC in .NET 4.